Keywords:

Multicentre study, Not applicable, Retrospective, Dementia, Computer Applications-General, MR, Neuroradiology brain, Computer applications, Artificial Intelligence, Artificial Intelligence and Machine Learning

Authors:

S. Kaliyugarasan, A. Lundervold, A. S. Lundervold; Bergen/NO

DOI:

10.26044/ecr2020/C-05555

Methods and materials

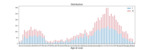

To train our model, we used a total of 7462 T1-weighted images from 4355 healthy subjects, sourced from eight publicly available data sets: ADNI [2], CC-359 [3], HCP Young Adult [4], IXI [5], OASIS [6], PPMI [7], SALD [8] and YALE [9] (ages 20-90 years, mean 61.08 years, m/f = 1992/2363, Fig. 2). To assess the generalization and robustness of our approach, we evaluated the model on completely independent data: 473 T1-weighted MR images from 267 healthy elderly subjects in the AIBL data set [10] (ages 60-85 years, mean 72.38 years, m/f = 116/151, Fig. 3).

We performed minimal pre-processing of the DICOM images. This keeps the pipeline fast (2 s of pre-processing per volume) and loses little potentially useful information in the images. The steps were: conversion from DICOM to NIfTI, reslicing to 256×256×256 matrices using trilinear interpolation, and fast deep learning-based skull-stripping (so that the model estimates "brain age", not "head age").
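The reslicing and masking steps can be sketched in PyTorch. This is a minimal illustration under stated assumptions, not the authors' pipeline: the volume is assumed to be already loaded as a tensor (DICOM-to-NIfTI conversion is not shown), the binary brain mask is assumed to come from a separate skull-stripping model, and the function names are hypothetical. Unlike a full reslicing, the sketch only resizes the voxel matrix and ignores voxel spacing and orientation.

```python
import torch
import torch.nn.functional as F


def reslice_to_cube(volume: torch.Tensor, size: int = 256) -> torch.Tensor:
    """Resample a 3D volume (D, H, W) to (size, size, size) with trilinear interpolation.

    Assumption: only the matrix is resized; voxel spacing and orientation are ignored.
    """
    x = volume.float()[None, None]  # add batch and channel dims -> (1, 1, D, H, W)
    x = F.interpolate(x, size=(size, size, size), mode="trilinear", align_corners=False)
    return x[0, 0]  # drop batch and channel dims again


def apply_brain_mask(volume: torch.Tensor, mask: torch.Tensor) -> torch.Tensor:
    """Zero out non-brain voxels.

    Hypothetical helper: `mask` is assumed to be a same-shaped binary mask
    produced by a separate skull-stripping model (not shown).
    """
    return volume * (mask > 0).to(volume.dtype)


if __name__ == "__main__":
    raw = torch.rand(176, 240, 256)  # stand-in for a loaded T1-weighted volume
    cube = reslice_to_cube(raw)
    print(cube.shape)  # torch.Size([256, 256, 256])
```

Using `F.interpolate` with `mode="trilinear"` keeps the step on the same tensor library as the model, so it can run on a GPU alongside the network.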

The pre-processed images were the inputs to our 3D convolutional neural network (CNN), a tailor-made architecture with residual connections and adaptive average pooling, implemented in the PyTorch deep learning framework. Its layers are divided into two layer groups. This enables a different learning rate for each group (i.e., discriminative learning rates) and eases re-use of the trained weights of the early layers for other tasks (i.e., transfer learning).
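The architecture is not specified in detail here, so the following is only a hypothetical sketch of a 3D residual CNN with adaptive average pooling, split into two layer groups: a convolutional `body` and a regression `head` (names, widths, depth and learning rates are all assumptions). Plain PyTorch optimizer parameter groups stand in for fastai's layer-group mechanism to show how discriminative learning rates attach to each group.

```python
import torch
import torch.nn as nn


class ResBlock3d(nn.Module):
    """Two 3x3x3 convolutions plus a shortcut (identity, or 1x1x1 projection if the shape changes)."""

    def __init__(self, c_in: int, c_out: int, stride: int = 1):
        super().__init__()
        self.conv1 = nn.Conv3d(c_in, c_out, 3, stride=stride, padding=1, bias=False)
        self.bn1 = nn.BatchNorm3d(c_out)
        self.conv2 = nn.Conv3d(c_out, c_out, 3, padding=1, bias=False)
        self.bn2 = nn.BatchNorm3d(c_out)
        self.relu = nn.ReLU(inplace=True)
        if stride != 1 or c_in != c_out:
            # Projection so the shortcut matches the main path's shape
            self.shortcut = nn.Sequential(
                nn.Conv3d(c_in, c_out, 1, stride=stride, bias=False),
                nn.BatchNorm3d(c_out),
            )
        else:
            self.shortcut = nn.Identity()

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        out = self.relu(self.bn1(self.conv1(x)))
        out = self.bn2(self.conv2(out))
        return self.relu(out + self.shortcut(x))  # residual connection


class BrainAgeCNN(nn.Module):
    """Hypothetical two-group model: `body` (feature extractor) and `head` (age regressor)."""

    def __init__(self, widths=(16, 32, 64, 128)):
        super().__init__()
        layers = [nn.Conv3d(1, widths[0], 3, stride=2, padding=1, bias=False),
                  nn.BatchNorm3d(widths[0]), nn.ReLU(inplace=True)]
        for c_in, c_out in zip(widths[:-1], widths[1:]):
            layers.append(ResBlock3d(c_in, c_out, stride=2))
        self.body = nn.Sequential(*layers)
        # Adaptive pooling makes the head independent of the input volume size
        self.head = nn.Sequential(nn.AdaptiveAvgPool3d(1), nn.Flatten(),
                                  nn.Linear(widths[-1], 1))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.head(self.body(x)).squeeze(1)  # one predicted age per volume


model = BrainAgeCNN()
# Discriminative learning rates: a smaller LR for the body, a larger one for the head
optimizer = torch.optim.AdamW([
    {"params": model.body.parameters(), "lr": 1e-4},
    {"params": model.head.parameters(), "lr": 1e-3},
])
```

Because of the adaptive pooling, the same network accepts volumes of different sizes. For transfer learning, the trained `body` could be kept and a new `head` attached for a different task.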

We extended the fastai library, which is built on top of PyTorch, to 3D MR images: we constructed new data loaders and data augmentation capabilities and enabled the use of custom 3D CNNs, while retaining support for fastai's highly impactful training techniques. These include the learning rate finder, used to select a suitable learning rate, and the one-cycle policy (i.e., the learning rate is first increased and then decreased over the course of training, related to the phenomenon called super-convergence).
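The two learning rate techniques can be illustrated in plain Python. This is a sketch under assumptions, not fastai's implementation: the learning rate finder is modelled as an exponential sweep of learning rates (one mini-batch is trained per value, and the recorded losses are inspected afterwards), and the one-cycle schedule uses a cosine warm-up followed by cosine annealing, with assumed values for `pct_start`, `div` and `div_final`. fastai's one-cycle policy also cycles momentum, which is omitted here.

```python
import math


def lr_range(start_lr: float, end_lr: float, num_iters: int) -> list:
    """LR finder sweep: learning rates growing exponentially from start_lr to end_lr."""
    ratio = end_lr / start_lr
    return [start_lr * ratio ** (i / (num_iters - 1)) for i in range(num_iters)]


def _cos_interp(start: float, end: float, pct: float) -> float:
    """Cosine interpolation: returns start at pct=0 and end at pct=1."""
    return end + (start - end) / 2 * (math.cos(math.pi * pct) + 1)


def one_cycle_lr(step: int, total_steps: int, lr_max: float,
                 pct_start: float = 0.25, div: float = 25.0,
                 div_final: float = 1e5) -> float:
    """Learning rate at `step` of a one-cycle schedule (assumed hyperparameters).

    Phase 1: warm up from lr_max / div to lr_max over the first pct_start of training.
    Phase 2: anneal from lr_max down to lr_max / div_final over the remaining steps.
    """
    warmup = int(total_steps * pct_start)
    if step < warmup:
        return _cos_interp(lr_max / div, lr_max, step / warmup)
    pct = (step - warmup) / max(1, total_steps - warmup - 1)
    return _cos_interp(lr_max, lr_max / div_final, pct)


if __name__ == "__main__":
    schedule = [one_cycle_lr(s, 100, 1e-3) for s in range(100)]
    print(f"start={schedule[0]:.2e} peak={max(schedule):.2e} end={schedule[-1]:.2e}")
```

In a training loop, the value returned by `one_cycle_lr` would be written into each optimizer parameter group before every step; with discriminative learning rates, each layer group would use its own `lr_max`.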